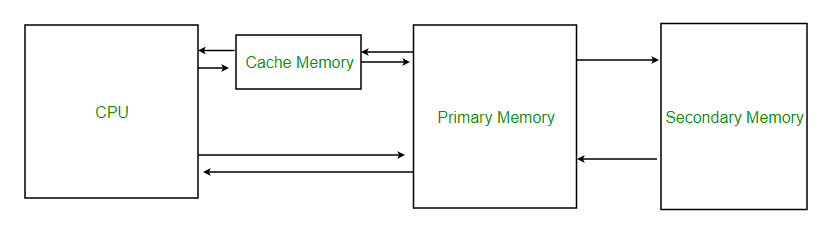

Cache memory is a small, high-speed memory that stores copies of data from frequently used main memory locations. A CPU typically contains several independent caches, which hold instructions and data. The main purpose of cache memory is to reduce the average time needed to access data from main memory.

By storing this information closer to the CPU, cache memory helps speed up the overall processing time. Cache memory is much faster than the main memory (RAM). When the CPU needs data, it first checks the cache. If the data is there, the CPU can access it quickly. If not, it must fetch the data from the slower main memory.


When the processor needs to read or write a location in the main memory, it first checks for a corresponding entry in the cache.
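The check-the-cache-first behaviour described above can be sketched in a few lines. This is only a toy model: `main_memory`, `cache`, and `read` are hypothetical names, both memories are plain dictionaries, and the cache is never allowed to fill up, so no replacement policy is involved.

```python
# Toy model: main memory maps address -> value; the cache starts empty.
main_memory = {addr: addr * 10 for addr in range(16)}  # hypothetical contents
cache = {}

def read(addr):
    """Return (value, hit) for a read of addr, filling the cache on a miss."""
    if addr in cache:
        # Cache hit: the value is served without touching main memory.
        return cache[addr], True
    # Cache miss: fetch from the slower main memory and keep a copy.
    value = main_memory[addr]
    cache[addr] = value
    return value, False

first = read(5)   # miss: the value comes from main memory
second = read(5)  # hit: the value comes from the cache
print(first, second)
```

The first read of an address is a miss and the repeated read is a hit, which is the pattern that real caches exploit for frequently used locations.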

The performance of cache memory is frequently measured in terms of a quantity called the hit ratio.

Hit ratio (H) = hits / (hits + misses) = number of hits / total accesses

Miss ratio = misses / (hits + misses) = number of misses / total accesses = 1 - H
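As a quick check of the two ratios above, here is a minimal sketch that computes them from hit and miss counts. The function names and the counts (90 hits, 10 misses) are made up for illustration.

```python
def hit_ratio(hits, misses):
    """Fraction of accesses that were served from the cache."""
    total = hits + misses
    if total == 0:
        raise ValueError("no accesses recorded")
    return hits / total

def miss_ratio(hits, misses):
    """Fraction of accesses that had to go to main memory (1 - hit ratio)."""
    return 1.0 - hit_ratio(hits, misses)

h = hit_ratio(90, 10)       # 90 hits out of 100 accesses
miss = miss_ratio(90, 10)   # the remaining fraction, about 0.1
print(h, miss)
```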

Cache performance can be improved by using a larger cache block size and higher associativity and, more generally, by reducing the miss rate, the miss penalty, and the time to hit in the cache.
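The three quantities named here (hit time, miss rate, miss penalty) are commonly combined in the textbook relation average access time = hit time + miss rate × miss penalty. The sketch below assumes that relation; the function name and the cycle counts are hypothetical and only illustrate how lowering the miss rate lowers the average.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time and miss_penalty."""
    return hit_time + miss_rate * miss_penalty

# Hypothetical numbers: 1-cycle hit time, 100-cycle miss penalty.
base = average_access_time(hit_time=1.0, miss_rate=0.10, miss_penalty=100.0)
improved = average_access_time(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)
print(base, improved)  # roughly 11 cycles versus roughly 6 cycles
```

Halving the miss rate in this made-up configuration nearly halves the average access time, because the miss penalty dominates the hit time.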

Three types of mapping are used with cache memory: direct mapping, associative mapping, and set-associative mapping.

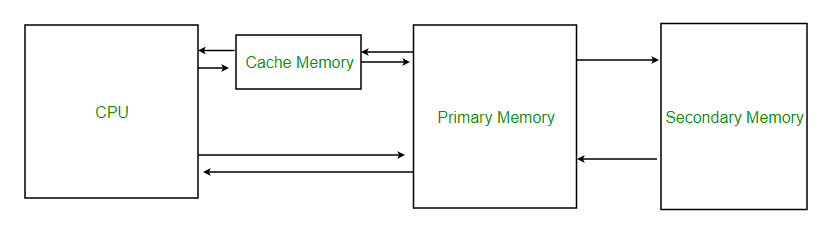

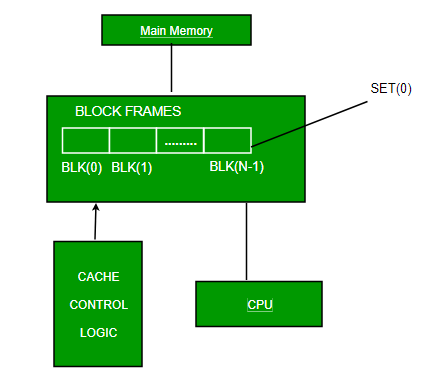

The simplest technique, known as direct mapping, maps each block of main memory into only one possible cache line. In other words, each memory block is assigned to a specific line in the cache. If that line is already occupied by another memory block when a new block needs to be loaded, the old block is discarded. For block placement, the address is split into two parts: an index field and a tag field. The index selects the cache line, and the cache stores the tag alongside the line's data so that it can tell which memory block currently occupies that line. Direct mapping's performance is directly proportional to the hit ratio.

i = j modulo m

where

i = cache line number

j = main memory block number

m = number of lines in the cache
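The rule i = j modulo m can be tried out with a small simulation. This is a sketch only: `direct_mapped_line` and `simulate_direct_mapped` are hypothetical helper names, the cache tracks block numbers rather than real data, and the 4-line cache size is an arbitrary choice.

```python
def direct_mapped_line(j, m):
    """Cache line i for main memory block j in a cache with m lines."""
    return j % m

def simulate_direct_mapped(blocks, m):
    """Return a hit/miss flag for each accessed block number."""
    lines = [None] * m  # lines[i] holds the block number currently in line i
    hits = []
    for j in blocks:
        i = direct_mapped_line(j, m)
        hits.append(lines[i] == j)
        lines[i] = j  # on a miss the old block is simply overwritten
    return hits

# Blocks 0 and 1 use different lines, so the repeated accesses hit.
friendly = simulate_direct_mapped([0, 1, 0, 1], m=4)
# Blocks 0 and 4 both map to line 0, so they keep evicting each other.
conflicting = simulate_direct_mapped([0, 4, 0, 4], m=4)
print(friendly, conflicting)
```

The second access pattern never hits even though three of the four lines stay empty, which is the main weakness of direct mapping.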

For purposes of cache access, each main memory address can be viewed as consisting of three fields. The least significant w bits identify a unique word or byte within a block of main memory. In most contemporary machines, the address is at the byte level. The remaining s bits specify one of the 2^s blocks of main memory. The cache logic interprets these s bits as a tag of s-r bits (the most significant portion) and a line field of r bits. This latter field identifies one of the m = 2^r lines of the cache. In direct mapping, the line field serves as the index bits.
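The three-field view of an address can be expressed with shifts and masks. The sketch below assumes the layout described above (tag in the most significant bits, then the line field of r bits, then the word field of w bits); the function name and the example widths w = 2 and r = 3 are hypothetical.

```python
def split_address(address, w, r):
    """Split an address into (tag, line, word) for a direct-mapped cache."""
    word = address & ((1 << w) - 1)         # least significant w bits
    line = (address >> w) & ((1 << r) - 1)  # next r bits select the cache line
    tag = address >> (w + r)                # remaining s - r bits
    return tag, line, word

# Example address built from tag = 0b1011, line = 0b101, word = 0b10.
address = (0b1011 << 5) | (0b101 << 2) | 0b10
fields = split_address(address, w=2, r=3)
print(fields)  # (11, 5, 2)
```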

Direct Mapping – Structure

In this type of mapping, associative memory is used to store both the content and the addresses of the memory words. Any block can go into any line of the cache. The word bits still identify which word in the block is needed, but the tag becomes all of the remaining bits. This allows any block to be placed anywhere in the cache. It is often described as the fastest and most flexible form of mapping, though a lookup must compare the tag against every line in the cache. In associative mapping, there are no index bits.
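A fully associative cache can be sketched as a small collection of tags with no index at all. Because any block may go in any line, some replacement policy is needed once the cache is full; the text does not specify one, so this sketch assumes least-recently-used (LRU) replacement. The class name and the access pattern are hypothetical.

```python
from collections import OrderedDict

class FullyAssociativeCache:
    """Tracks which block numbers are cached; assumes LRU replacement."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = OrderedDict()  # block number (the tag), oldest first

    def access(self, block_number):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        if block_number in self.lines:
            self.lines.move_to_end(block_number)  # mark as most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict the least recently used block
        self.lines[block_number] = None
        return False

assoc_cache = FullyAssociativeCache(num_lines=2)
results = [assoc_cache.access(b) for b in (0, 8, 0, 8, 16, 0)]
print(results)  # [False, False, True, True, False, False]
```

Blocks 0 and 8 coexist here regardless of their block numbers, and a block is evicted only when the whole cache is full.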

Associative Mapping – Structure

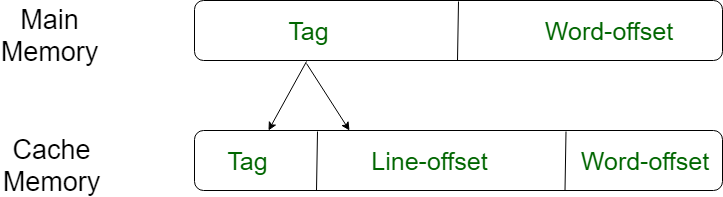

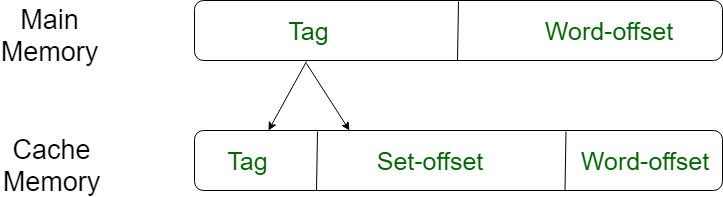

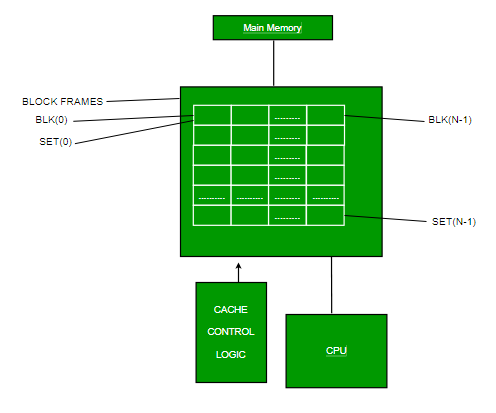

This form of mapping is an enhanced form of direct mapping that removes its main drawbacks. Set-associative mapping addresses the problem of possible thrashing in the direct mapping method. Instead of having exactly one line that a block can map to in the cache, a few lines are grouped together to create a set. A block in memory can then map to any one of the lines of a specific set. As a result, two or more words of main memory that share the same index address can be present in the cache at the same time. Set-associative mapping combines the best of the direct and associative cache mapping techniques. In set-associative mapping, the index bits are given by the set offset bits. In this case, the cache consists of a number of sets, each of which consists of a number of lines.

Relationships in the Set-Associative Mapping can be defined as:

m = v * k

i = j mod v

where

i = cache set number

j = main memory block number

v = number of sets

m = number of lines in the cache

k = number of lines in each set
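The relationships m = v * k and i = j mod v can be illustrated with a small simulation. This is a sketch under stated assumptions: the class name is hypothetical, the cache tracks tags only (no data), and LRU replacement within each set is assumed because the text does not name a policy.

```python
from collections import OrderedDict

class SetAssociativeCache:
    """v sets of k lines each; assumes LRU replacement inside a set."""

    def __init__(self, num_sets, lines_per_set):
        self.v = num_sets
        self.k = lines_per_set
        self.sets = [OrderedDict() for _ in range(num_sets)]

    @property
    def num_lines(self):
        return self.v * self.k  # m = v * k

    def access(self, block_number):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        i = block_number % self.v      # set number: i = j mod v
        tag = block_number // self.v   # identifies the block within its set
        lines = self.sets[i]
        if tag in lines:
            lines.move_to_end(tag)     # mark as most recently used
            return True
        if len(lines) >= self.k:
            lines.popitem(last=False)  # evict the least recently used line
        lines[tag] = None
        return False

set_cache = SetAssociativeCache(num_sets=4, lines_per_set=2)
hits = [set_cache.access(j) for j in (0, 4, 0, 4, 0, 4)]
print(set_cache.num_lines, hits)
```

Blocks 0 and 4 both map to set 0, but with two lines per set they can stay cached together, so every access after the first two is a hit.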

Set-Associative Mapping – Structure

Here are some of the applications of Cache Memory.

In conclusion, cache memory plays a crucial role in making computers faster and more efficient. By storing frequently accessed data close to the CPU, it reduces the time the CPU spends waiting for information. This means that tasks are completed quicker, and the overall performance of the computer is improved. Understanding cache memory helps us appreciate how computers manage to process information so swiftly, making our everyday digital experiences smoother and more responsive.

Cache memory is important because it helps speed up the processing time of the CPU by providing quick access to frequently used data, improving the overall performance of the computer.

Cache memory works by storing copies of frequently accessed data from the main memory. When the CPU needs data, it first checks the cache. If the data is found (a “cache hit”), it is quickly accessed. If not (a “cache miss”), the data is fetched from the slower main memory.

Cache memory is built into the CPU or located very close to it, so it cannot be upgraded separately like RAM. To get more or faster cache, you would need to upgrade the entire CPU.

Cache memory is volatile, meaning it loses all stored data when the computer is turned off, just like RAM.